New Delhi: Instagram’s recommendation algorithms are reportedly promoting child sexual abuse content. Researchers have found that the Meta-owned platform’s algorithms connected, and even promoted, a vast network of pedophiles who commission and sell child sexual abuse material on the platform.

What Researchers Found About Instagram Algorithms

Instagram “helps connect and promote a vast network of accounts openly devoted to the commission and purchase of underage-sex content”, according to a report in The Wall Street Journal. This was revealed during a joint investigation by The Wall Street Journal and researchers at Stanford University and the University of Massachusetts Amherst.

“The Meta unit’s systems for fostering communities have guided users to child-sex content,” the report said, even as the platform says it is “improving internal controls”. The accounts the researchers found were advertised using blatant and explicit hashtags such as #pedo****, #preteensex, and #pedobait.

When researchers set up a test account and viewed content shared by these networks, Instagram immediately recommended more accounts to follow. “Following just a handful of these recommendations was enough to flood a test account with content that sexualizes children,” the report said.

The Stanford investigators found “128 accounts offering to sell child-sex-abuse material on Twitter, less than a third of the number they found on Instagram”. David Thiel, the chief technologist at the Stanford Internet Observatory, was quoted as saying that one has to “put guardrails in place”.

The report, published Wednesday, described a “vast pedophile network” that used Instagram to advertise the sale of illicit “child-sex material”.

Instagram allowed users to search hashtags related to child sexual abuse, including graphic terms such as #pedowhore, #preteensex, #pedobait, and #mnsfw (an acronym for “minors not safe for work”), researchers at Stanford University and the University of Massachusetts Amherst told The Wall Street Journal.

The hashtags directed users to accounts that purportedly offered to sell pedophilic materials via “menus” of content, including videos of children harming themselves or committing acts of bestiality, the researchers said.

Some accounts allowed buyers to “commission specific acts” or arrange “meet-ups,” the Journal said.

Sarah Adams, a Canadian social media influencer and activist who calls out online child exploitation, told the Journal she was affected by Instagram’s recommendation algorithm.

Adams said one of her followers flagged a distressing Instagram account in February called “incest toddlers,” which had an array of “pro-incest memes.” The mother of two said she interacted with the page only long enough to report it to Instagram.

After the brief interaction, Adams said she learned from concerned followers that Instagram had begun recommending the “incest toddlers” account to users who visited her page.

Meta confirmed to the Journal that the “incest toddlers” account violated its policies.

“You have to put guardrails in place for something that growth-intensive to still be nominally safe, and Instagram hasn’t,” Thiel said.

Reacting to the report, Tesla and SpaceX CEO Elon Musk said that the findings are “extremely concerning”.

Meta Vows Action

Responding to the report, Meta said it is setting up an internal team to look into the matter. “Child exploitation is a horrific crime. We are continuously investigating ways to actively defend against this behavior,” Meta said, according to a report by The Verge.

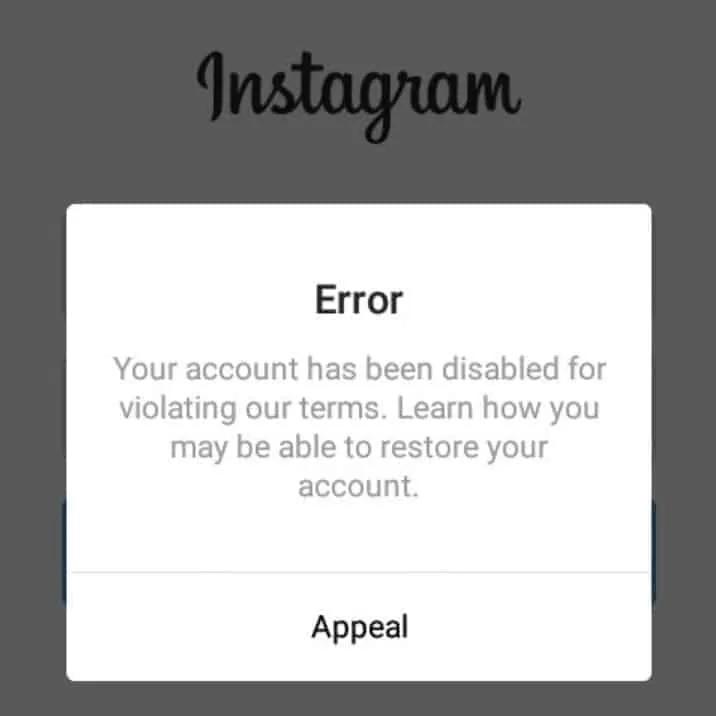

When reached for comment by the New York Post, a spokesperson for Meta, Instagram’s parent company, said it has since restricted the use of “thousands of additional search terms and hashtags on Instagram.”

Meta pointed to its extensive enforcement efforts related to child exploitation.

The company said it disabled more than 490,000 accounts that violated its child safety policies in January and blocked more than 29,000 devices for policy violations between May 27 and June 2.

Meta also took down 27 networks that spread abusive content on its platforms from 2020 to 2022.

The Journal noted that researchers at both Stanford and UMass Amherst discovered “large-scale communities promoting criminal sex abuse” on Instagram.

Meta said it already hires specialists from law enforcement and collaborates with child safety experts to ensure its methods for combating child exploitation are up to date.

In another bizarre development, the Journal noted that Instagram had previously shown users a pop-up warning that certain searches on the platform would yield results that “may contain images of child sexual abuse.”

The screen purportedly directed users to either “get resources” on the topic or “see results anyway.”

The report said Meta has since disabled the option allowing users to view the results anyway, but declined to say why it was ever offered in the first place.

In April, a group of law enforcement agencies that included the FBI and Interpol warned that Meta’s plans to expand end-to-end encryption on its platforms could effectively “blindfold” the company from detecting harmful content related to child sex abuse.

By India.com News Desk (support@india.com) and Thomas Barrabi, NY Post